Bagging in Machine Learning Explained

Let's assume we have a sample dataset of 1,000 examples. This post explains bagging and boosting as simply and briefly as possible.


Why bagging is important

Decision trees have a lot of similarity and correlation in their predictions.
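To see why correlation between trees matters, here is a standard textbook result for the variance of an average of $B$ identically distributed predictions $\hat f_b(x)$, each with variance $\sigma^2$ and pairwise correlation $\rho$ (the symbols are introduced here for illustration):

```latex
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B} \hat f_b(x)\right)
  = \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
```

As $B$ grows, the second term shrinks toward zero but the first does not: the more correlated the trees are, the less averaging can reduce variance.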

If the classifier is stable and simple (high bias), apply boosting. If you want to know more about ensemble models, bagging and boosting are their two most important techniques.

Bagging explained step by step, along with its math. What are the pitfalls of bagging algorithms?
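The steps can be written compactly. Assume a training set $D$ of $n$ examples, $B$ bootstrap rounds, and a base learner that returns a model $\hat f_b$ when fit to a sample (this notation is chosen here for illustration):

```latex
\begin{aligned}
&\text{for } b = 1,\dots,B:\quad D_b = n \text{ points drawn from } D \text{ with replacement},\quad \hat f_b = \operatorname{fit}(D_b)\\
&\text{regression:}\quad \hat f_{\text{bag}}(x) = \frac{1}{B}\sum_{b=1}^{B} \hat f_b(x)\\
&\text{classification:}\quad \hat f_{\text{bag}}(x) = \arg\max_{k} \sum_{b=1}^{B} \mathbb{1}\!\left[\hat f_b(x) = k\right]
\end{aligned}
```

Regression averages the models' outputs; classification takes a majority vote over the predicted classes $k$.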

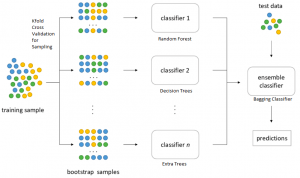

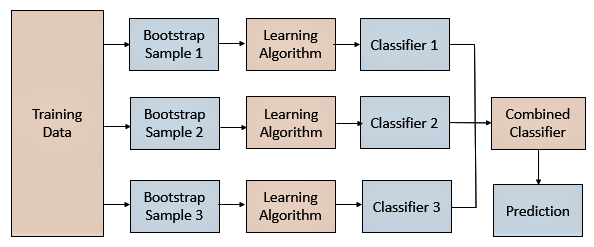

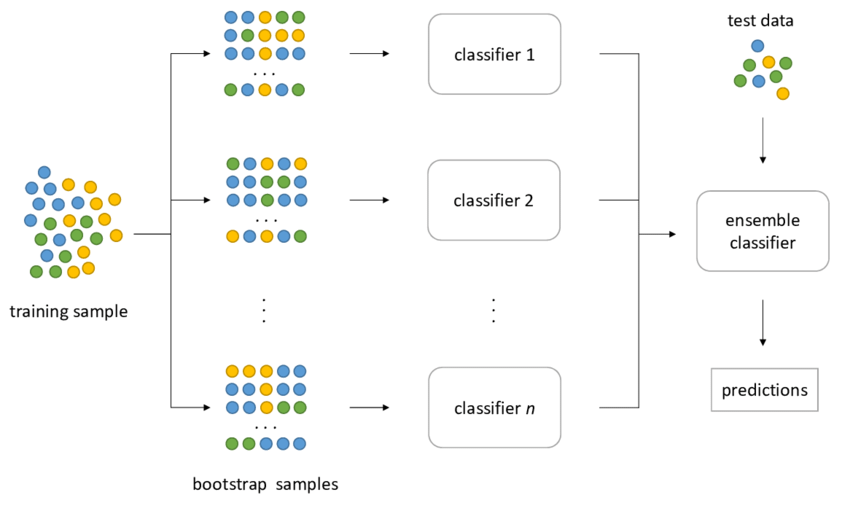

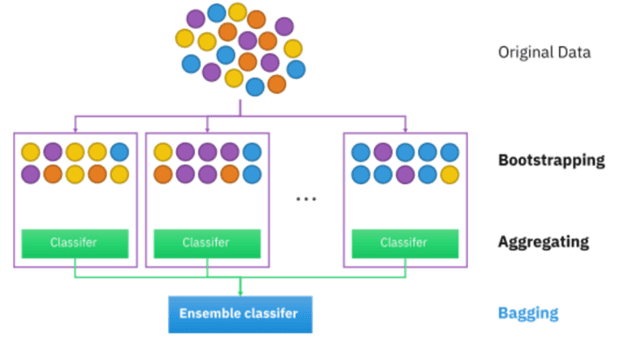

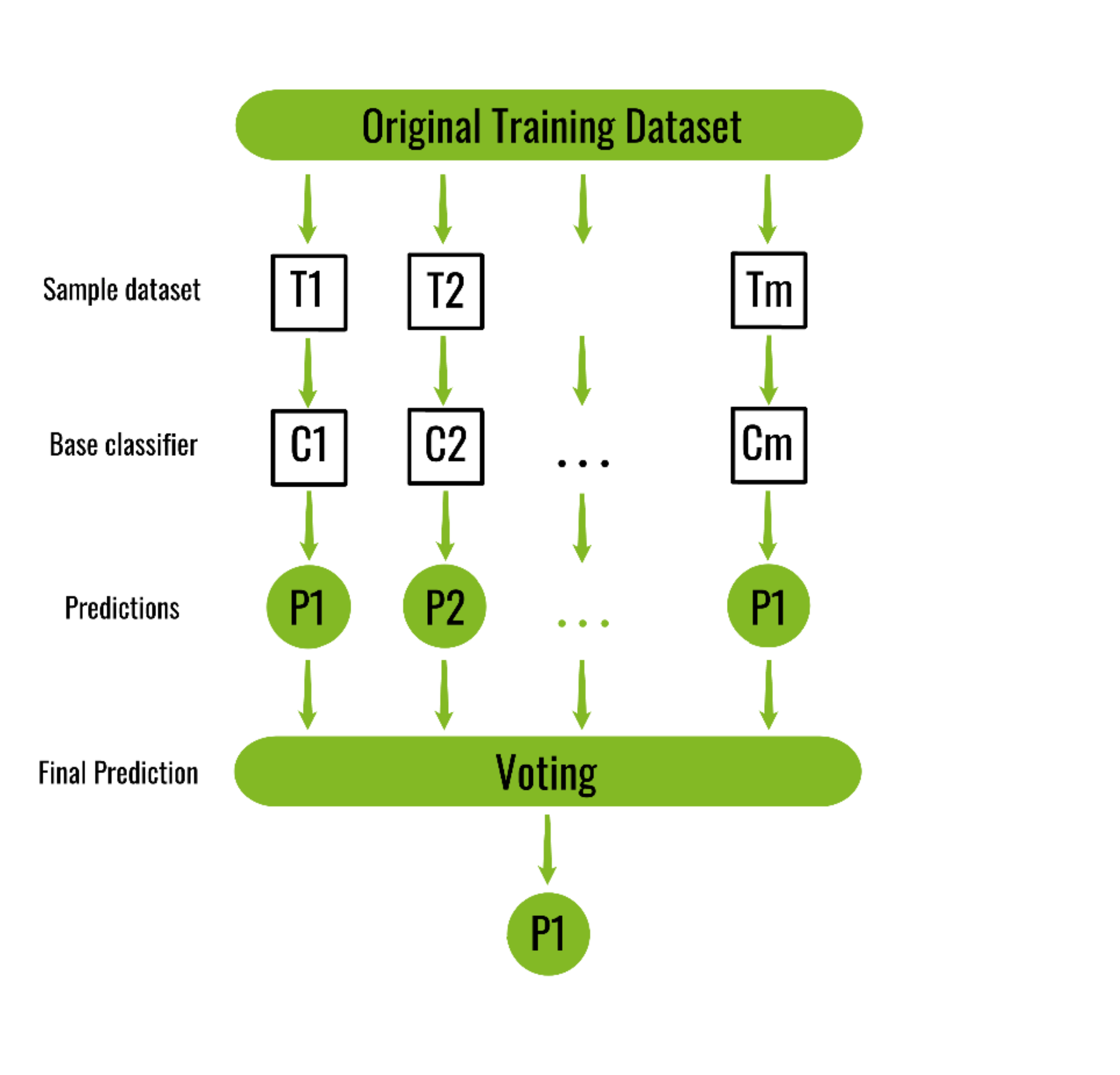

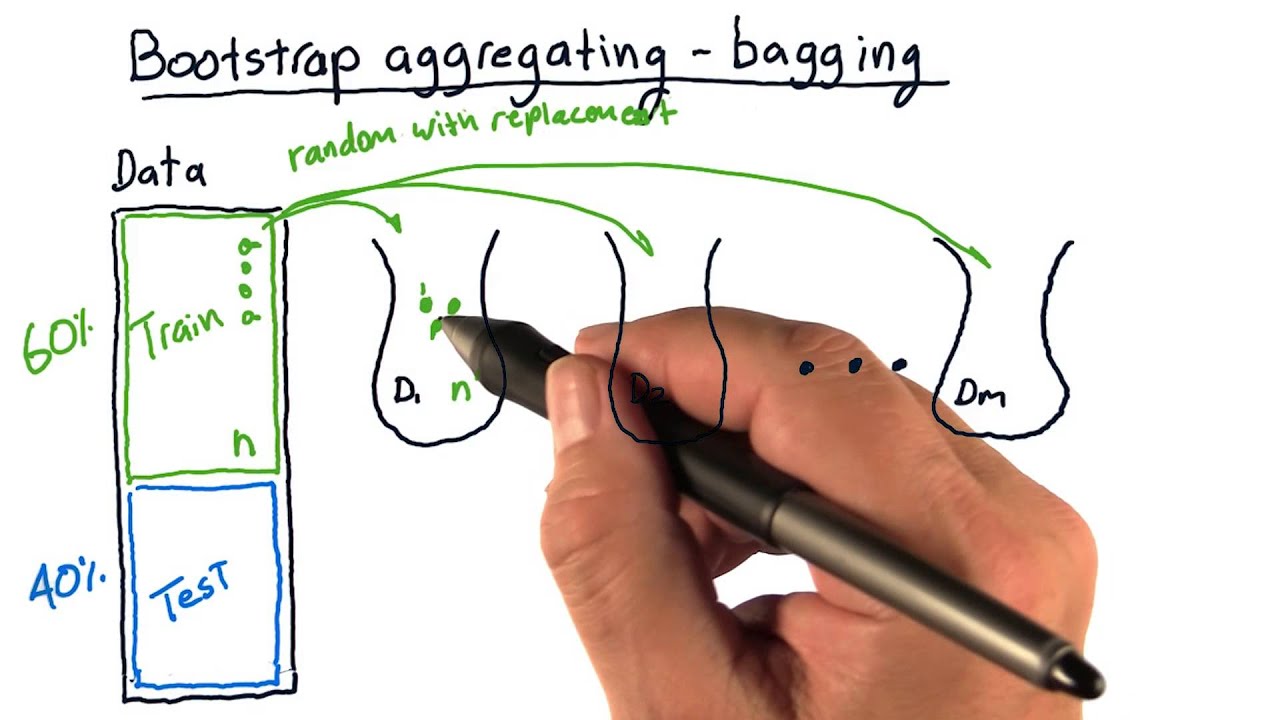

Bagging is the application of the bootstrap procedure to a high-variance machine learning algorithm, typically decision trees.
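A bootstrap sample is simply $n$ rows drawn uniformly with replacement from the $n$ original rows. A minimal NumPy sketch for the 1,000-row dataset assumed at the start; the helper name `bootstrap_indices` is hypothetical:

```python
import numpy as np

def bootstrap_indices(n_samples, rng):
    """Draw n_samples row indices uniformly *with replacement* (one bootstrap sample)."""
    return rng.integers(0, n_samples, size=n_samples)

rng = np.random.default_rng(42)
n = 1000  # size of the example dataset assumed in this post
idx = bootstrap_indices(n, rng)

# Some rows are picked several times, others not at all
unique_fraction = len(np.unique(idx)) / n
print(f"bootstrap sample size: {len(idx)}, fraction of unique rows: {unique_fraction:.3f}")
```

On average about $1 - 1/e \approx 63.2\%$ of the original rows appear in each bootstrap sample; the rows left out ("out-of-bag" rows) can be used to estimate the model's error.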

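As we said already, bagging is a method of merging the predictions of models of the same type. It is mainly a way to avoid overfitting: averaging many models reduces variance, but it does little to fix underfitting (high bias) in machine learning models.

The post Machine Learning Explained: Bagging appeared first on Enhance Data Science.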
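A minimal sketch using scikit-learn (an assumption: the post does not name a library) compares one fully grown decision tree with a bagged ensemble of such trees on synthetic data of the same 1,000-sample size. The numbers depend on the data and the seed, so treat this as an experiment to run, not a guaranteed result:

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import BaggingClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic binary classification data with 1,000 samples
X, y = make_classification(n_samples=1000, n_features=20, n_informative=5, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

# One fully grown tree: low bias, high variance
tree = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)

# 100 trees (the default base estimator is a decision tree), each fit on a bootstrap sample
bagging = BaggingClassifier(n_estimators=100, random_state=0).fit(X_train, y_train)

tree_acc = accuracy_score(y_test, tree.predict(X_test))
bag_acc = accuracy_score(y_test, bagging.predict(X_test))
print(f"single tree: {tree_acc:.3f}, bagged trees: {bag_acc:.3f}")
```

If the bagged ensemble scores higher here, that gain comes from reduced variance; the individual trees are no less biased than the single tree.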

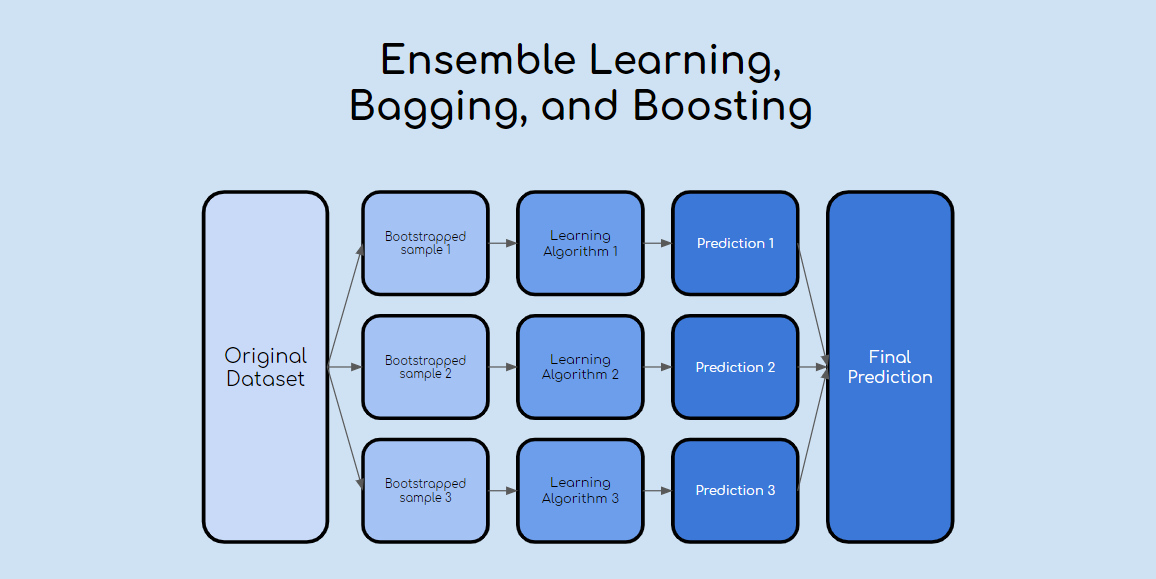

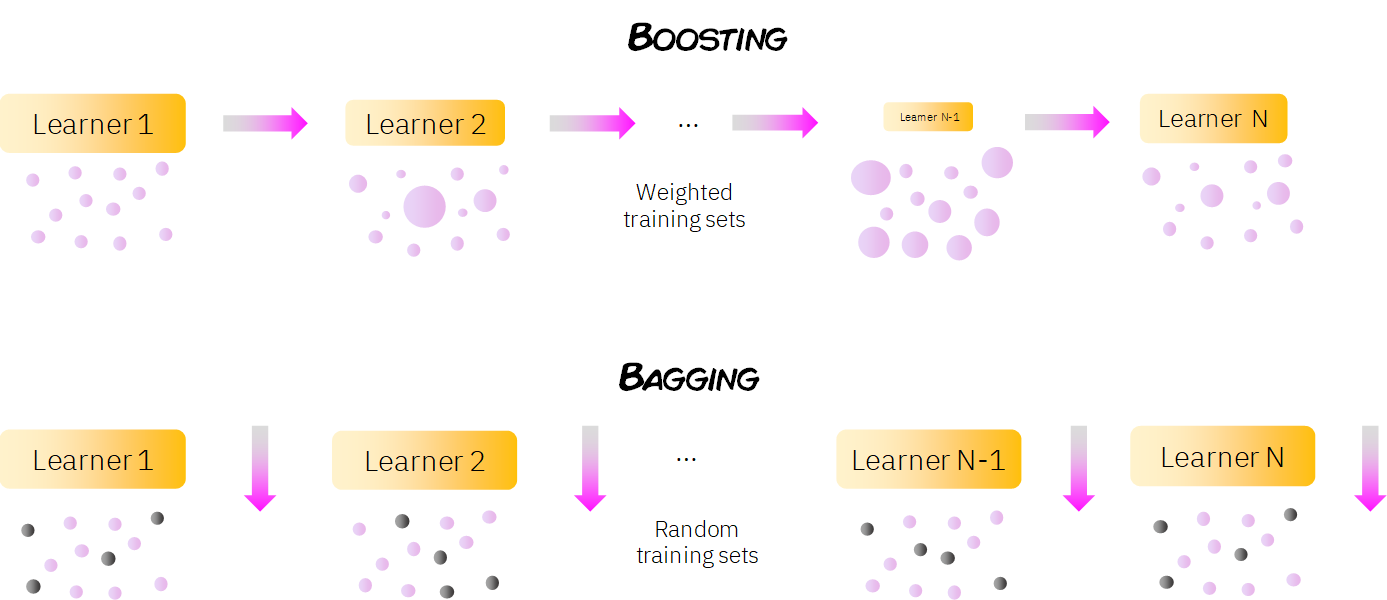

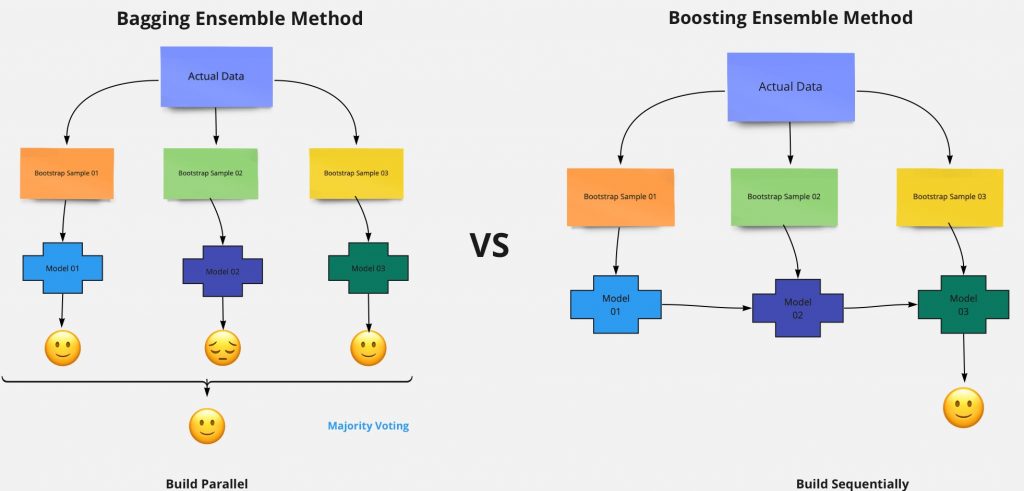

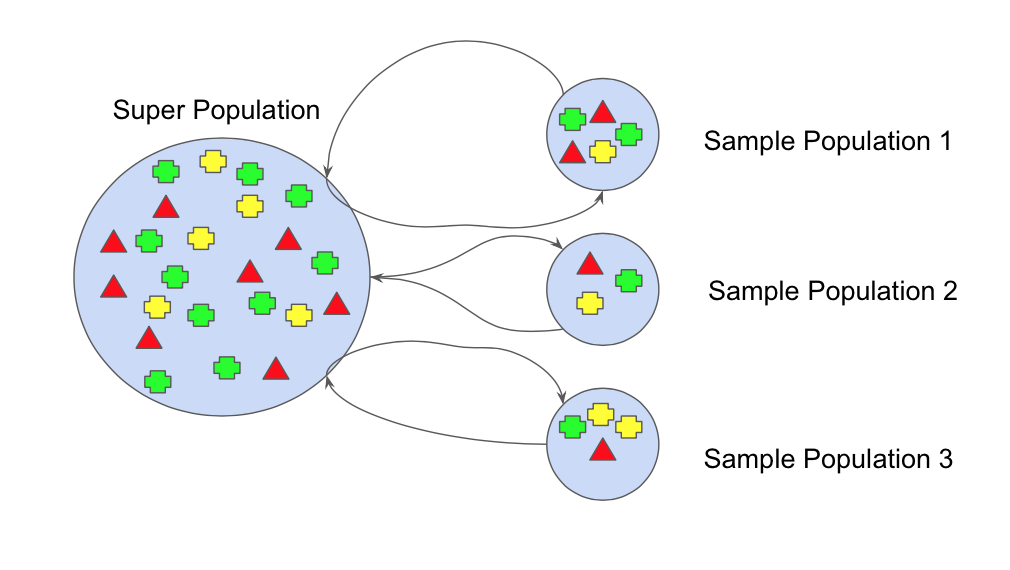

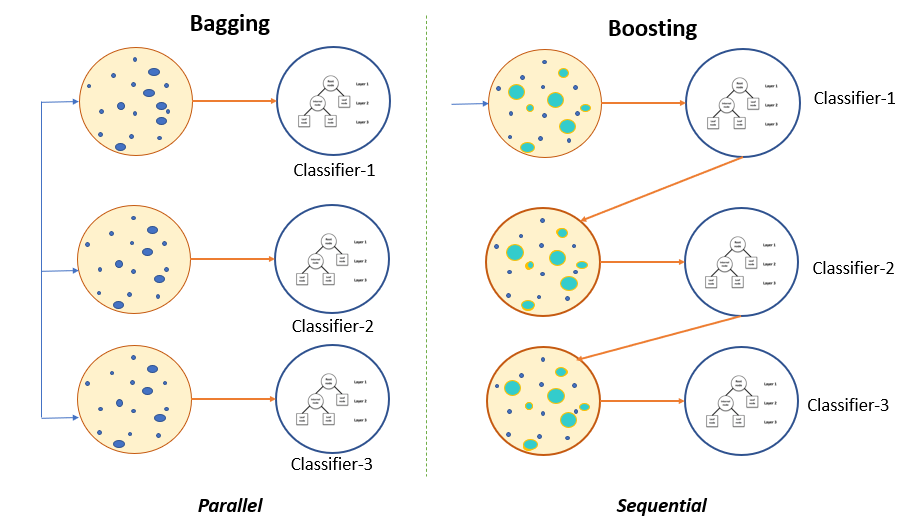

Ensemble learning is a machine learning paradigm where multiple models, often called weak learners, are trained to solve the same problem and combined to get better results. Bagging and boosting are the two main ensemble methods in machine learning.
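One common way to combine classifiers is a majority ("hard") vote over their predicted labels. A minimal plain-Python sketch; the function name `majority_vote` is hypothetical:

```python
from collections import Counter

def majority_vote(predictions):
    """Combine several models' class predictions for ONE input by majority vote.

    Ties go to the label seen first, because Counter.most_common orders
    equal counts by first occurrence.
    """
    return Counter(predictions).most_common(1)[0][0]

print(majority_vote(["spam", "ham", "spam"]))  # → spam
```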


Boosting tries to reduce bias. Bagging, also known as bootstrap aggregating, is the process in which multiple models of the same learning algorithm are trained on bootstrapped samples of the original dataset.
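To make that definition concrete, here is a from-scratch sketch: several models of the same learning algorithm (a scikit-learn decision tree, our assumption) are each trained on their own bootstrap sample, and their predictions are combined by majority vote. `fit_bagging` and `predict_bagging` are hypothetical helper names, not a library API:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier

def fit_bagging(X, y, n_models=25, seed=0):
    """Train n_models decision trees, each on its own bootstrap sample of (X, y)."""
    rng = np.random.default_rng(seed)
    n = len(X)
    models = []
    for _ in range(n_models):
        idx = rng.integers(0, n, size=n)  # n rows drawn with replacement
        models.append(DecisionTreeClassifier(random_state=0).fit(X[idx], y[idx]))
    return models

def predict_bagging(models, X):
    """Majority vote over the trees' predictions (assumes integer labels 0..K-1)."""
    votes = np.stack([m.predict(X) for m in models])  # shape (n_models, n_samples)
    return np.apply_along_axis(lambda col: np.bincount(col).argmax(), 0, votes)

X, y = make_classification(n_samples=1000, random_state=0)
models = fit_bagging(X, y)
preds = predict_bagging(models, X[:10])
print(preds)
```

Because every tree sees a slightly different sample, the trees make different mistakes, and the vote smooths those mistakes out.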

Bagging and boosting are ensemble techniques that reduce the bias and variance of a model: bagging mainly targets variance, while boosting mainly targets bias. Bagging tries to solve the overfitting problem and helps to improve the performance and accuracy of machine learning algorithms.
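The two approaches can be compared side by side. This sketch assumes scikit-learn and uses its default base learners: `BaggingClassifier` defaults to fully grown decision trees (high variance), while `AdaBoostClassifier` defaults to depth-1 trees, or "stumps" (high bias). Which one wins depends on the data:

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import AdaBoostClassifier, BaggingClassifier
from sklearn.model_selection import cross_val_score

X, y = make_classification(n_samples=1000, n_features=20, n_informative=5, random_state=0)

models = {
    # Bagging: deep trees trained independently on bootstrap samples, then voted
    "bagging": BaggingClassifier(n_estimators=100, random_state=0),
    # Boosting: stumps trained one after another, each focusing on earlier errors
    "boosting": AdaBoostClassifier(n_estimators=100, random_state=0),
}

scores = {name: cross_val_score(model, X, y, cv=5).mean() for name, model in models.items()}
for name, score in scores.items():
    print(f"{name}: mean 5-fold accuracy = {score:.3f}")
```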

If the classifier is unstable (high variance), apply bagging.
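How can you tell whether a classifier is unstable? One rough diagnostic (our own illustration, not a standard library function) is to refit the model on bootstrap samples and measure how often its predictions on held-out data flip. The helper `instability` is hypothetical and assumes binary 0/1 labels:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.tree import DecisionTreeClassifier

def instability(make_model, X, y, X_eval, n_rounds=20, seed=0):
    """Refit a fresh model on bootstrap samples of (X, y) and return the average
    fraction of X_eval predictions that disagree with the majority prediction.
    Assumes binary labels 0/1."""
    rng = np.random.default_rng(seed)
    n = len(X)
    preds = []
    for _ in range(n_rounds):
        idx = rng.integers(0, n, size=n)  # bootstrap sample
        preds.append(make_model().fit(X[idx], y[idx]).predict(X_eval))
    preds = np.array(preds)  # shape (n_rounds, n_eval)
    majority = (preds.mean(axis=0) >= 0.5).astype(int)
    return float((preds != majority).mean())

X, y = make_classification(n_samples=1000, n_features=20, n_informative=5, random_state=0)
X_train, y_train, X_eval = X[:800], y[:800], X[800:]  # hold out 200 rows

tree_score = instability(lambda: DecisionTreeClassifier(random_state=0), X_train, y_train, X_eval)
logreg_score = instability(lambda: LogisticRegression(max_iter=1000), X_train, y_train, X_eval)
print(f"deep tree: {tree_score:.3f}, logistic regression: {logreg_score:.3f}")
```

You would typically expect the deep tree to score higher than logistic regression, which is why trees are the usual base learner for bagging; the exact values depend on the data.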
